How to project detected lanes from images into ground plane in ROS2

Learning Goals / Tasks

- You will learn to create simple ROS2 nodes and use custom msgs

- You will learn how to utilize OpenCVs Mat datastructure and built-in functions to project imagepoints to a ground plane

- You will learn the meaning of some camera parameters and necessary mathematical operations for imagepoint to worldpoint backprojection

- You will learn a good way to visualize points that are (or should be) on a curve or straight line

Prerequisites

- ADE (Autoware)

- Pull the template ROS2 workspace containing the prepared packages

- In ADE (or wherever you work) you will need

libopencv-dev(Install withsudo apt-get install libopencv-dev) - Knowledgebase: I expect you watched the previous courses and understand subscribing, publishing, messages and of course mediocre knowledge of c++ development

Source location

The source code is located at:

https://gitlab.com/ApexAI/autowareclass2020/-/tree/master/code/src/08_Perception_Camera

There you will find the workspace to work on and the solution workspace. You can chose wether to make your hands dirty or just check out the solution. Anyway if you want to run it you need to have pulled the autoware course to an environment where you can build ROS2 nodes.

Source install

cd ~

git clone https://gitee.com/knowmefly/camera-hands-on-ws.git

cd ~/camera-hands-on-ws/src/lane_detection_data_loader/resources/

wget http://www.aigrantli.com:8081/directlink/book/ROS2/Autoware%20Demo/videos/laneVideo.h264

ade start

ade enter

# 编译

cd ~/camera-hands-on-ws/

colcon build

# 运行节点

# terminal 1

cd ~/camera-hands-on-ws/

source install/setup.bash

ros2 run lane_detection_data_loader lane_detection_data_loader_node

# terminal 2

cd ~/camera-hands-on-ws/

source install/setup.bash

ros2 run lane_detection_projection lane_detection_projection_node

# terminal 3

cd ~/camera-hands-on-ws/

source install/setup.bash

ros2 run lane_detection_visualization lane_detection_visualization_node

# terminal 4

cd ~/camera-hands-on-ws/

source install/setup.bash

rviz2

以上指令可以通过launch文件调用:

mkdir -p /home/grantli/camera-hands-on-ws/install/lane_detection_visualization/share/lane_detection_visualization/launch

gedit /home/grantli/camera-hands-on-ws/install/lane_detection_visualization/share/lane_detection_visualization/launch/lane_detection_visualization.launch.py

添加下面内容:

import os

from ament_index_python import get_package_share_directory

import launch.substitutions

from launch_ros.actions import Node

from launch.actions import Shutdown

from launch.actions import DeclareLaunchArgument, ExecuteProcess, TimerAction

from launch.conditions import IfCondition

from launch.substitutions import LaunchConfiguration, PythonExpression

def get_param_file(package_name, file_name):

"""Pass the given param file as a LaunchConfiguration."""

file_path = os.path.join(

get_package_share_directory(package_name),

'param',

file_name)

return launch.substitutions.LaunchConfiguration(

'params', default=[file_path])

def generate_launch_description():

lane_detection_node = Node(

executable='lane_detection_data_loader_node',

name='lane_detection',

on_exit=Shutdown(),

package='lane_detection_data_loader'

)

lane_detection_projection_node = Node(

executable='lane_detection_projection_node',

name='lane_detection_projection',

on_exit=Shutdown(),

package='lane_detection_projection'

)

lane_detection_visualization_node = Node(

executable='lane_detection_visualization_node',

name='lane_detection_visualization',

on_exit=Shutdown(),

package='lane_detection_visualization'

)

run_rviz2 = ExecuteProcess(

cmd=[[

'rviz2'

]],

shell=True

)

return launch.LaunchDescription([

lane_detection_node,

# point_cloud_filter_transform_node,

lane_detection_projection_node,

lane_detection_visualization_node,

TimerAction(

period=2.0,

actions=[run_rviz2],

)

])

保存并启动:

ade enter

cd ~/camera-hands-on-ws

source install/setup.bash

ros2 launch lane_detection_visualization lane_detection_visualization.launch.py

Demo

Introduction

To enable autonomous driving many competitors use some kind of lane detection algorithm. Whether it’s a neural network or a series of smart image processing steps, in the end, most of them serve one purpose: to give us information on the position of lanemarkings (almost always in the image plane) which separate the individual driveable lanes on the street.

Image coordinates alone can’t give you enough information to safely make decisions on where the lane really is and how it is going to curve in what distance. For that you need to backproject the points into the world. This hands-on exercise is an attempt to make the theory behind the backprojection tangible and practical for the students of the autoware course.

How is this exercise gonna work and how was it made?

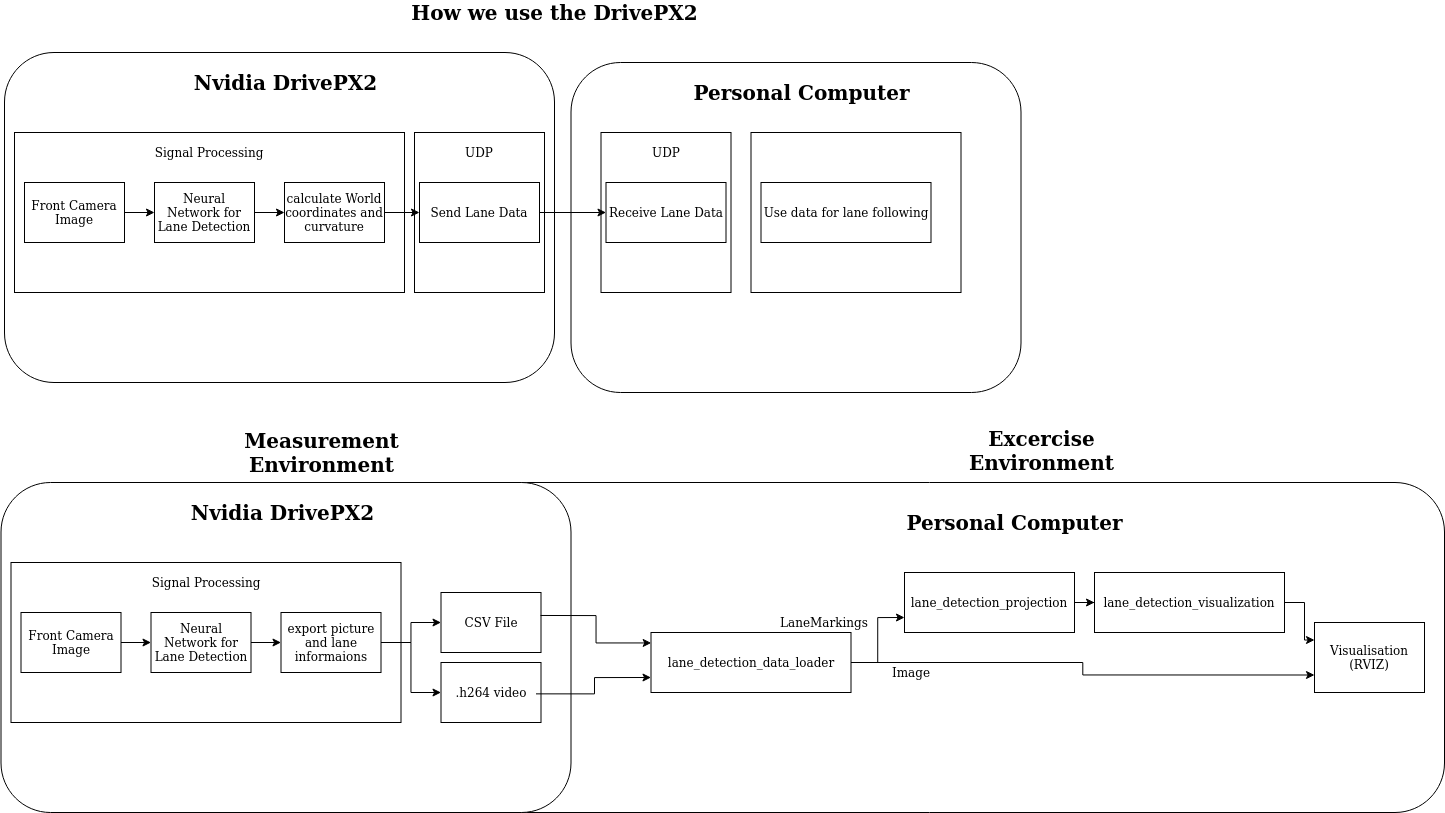

Graphical overview

NVIDIA Drive PX2 data generation

The Drive PX2 Platform served the purpose of extracting the detected lanes. NVIDIA uses a neural network called Lanenet to detect the pixelcoordinates of lane-separating lanemarkings. For this exercise the Lanenet results for one of our recorded drives have been serialized in a .csv file to be later read in our lane_detection_data_loader node.

lane_detection_data_loader

This node deserializes the .csv file generated by Lanenet and publishes the lane information synchronously to the video frames of the video used in the generation of the lanemarking data.

Source tree:

├── CMakeLists.txt

├── include

│ └── lane_detection_data_loader

│ └── tinylib.h -> this is just a header with some helper functions

├── package.xml

├── resources

│ ├── laneMarks.csv -> this is the the csv file with the lanemarking coordinates

│ └── laneVideoScaled.h264 -> this is the video of the lanenetresult

└── src

└── lane_detection_data_loader.cpp -> this is the source code for the node containing the deserialization of the csv file and video import into ros

lane_detection_projection

Here, you will have to write most of the code. This node is supposed to subscribe the published lane coordinates by the lane_detection_data_loader and publish the projected lane markings for later visualization in lane_detection_visualization.

Source tree:

├── CMakeLists.txt

├── include

│ └── lane_detection_projection

│ └── matrixDefines.h -> in this file you will find the predefined intrinsic matrix, rotation matrix and transform vector

├── package.xml

└── src

└── lane_detection_projection.cpp -> here most of the exercise will be coded

lane_detection_visualization

For visualization one option is to use the functionality of rviz. The standardized visualisation_msgs can be made use of for simple visualizations. This is exactly what is supposed to happen in this node.

Source tree:

├── CMakeLists.txt

├── include

│ └── lane_detection_visualization

├── package.xml

└── src

└── lane_detection_visualization.cpp -> this contains the main source for rviz visualization

lane_msgs

The lane_msgs package contains the definition of the custom ros message types used in the exercise.

LaneMarking.msg - contains u and v imagecoordinates

LaneMarkingArray.msg - contains a vector of LaneMarking

LaneMarkingArrayBoth.msg - contains a vector of the LaneMarking representing the nearest left lane-separating line and a vector of LaneMarking for the nearest right one

LaneMarkingProjected.msg - contains x, y, z coordinates of a projected point

LaneMarkingProjectedArray.msg - contains a vector of LaneMarkingProjected

LaneMarkingProjectedArrayBoth.msg - contains a vector of the LaneMarkingProjected representing the nearest left lane-separating line and a vector of LaneMarkingProjected for the nearest right one

Extra knowledge

Here is some specialized information you might find helpful when working on the exercise.

short OpenCV excourse

undistortPoints

cv::undistortPoints - this function executes the first step we need to backproject imagepoints. It undistorts a sparse set of 2D points, i. e. it multiplies this set by the inverse of our intrinsic matrix and returns a set of undistorted points

Datastructures

cv::Mat - this is a general container for all types of matrices in OpenCV. It has predefined behaviors with the most important operators such as the “*” operator.

You can get the inverse of a cv::Mat - if the contained matrix is squared (M=N) - by calling .inv() on it.

cv::Mat_<datatype> - is an extension of cv::Mat with which you can specify the datatype of the contained information.

Accessing the data of a cv::Mat looks similar. To access the second value of a cv::Mat that you expect to be of type “float”, you call some_matrix.at<float>(1).

cv::Point2f - this is a container for a 2D point. It is recommended to use this when representing the imagepoints you pass to cv::undistortPoints.

cv::MatIterator_<datatype> - with this you can iterate from the beginning of a matrix (some_matrix.begin<datatype>()) to the end of a matrix (some_matrix.end<datatype>()).

cv::noArray() - can be used to fill a parameter with an empty matrix if values are not given.

short Debugging excourse

You can debug your c++ source with gdb. I recommend using the graphical user interface based on gdb called ddd. To install the debugger in your environment type sudo apt-get install ddd in your terminal.

To be able to debug, you will need to call the executable with the debugger after having built it with debug symbols.

To build with debug symbols in ros2 you can type the command colcon build --cmake-args -DCMAKE_BUILD_TYPE=Debug in your workspace directory.

To call the executable with the debugger you can use the “prefix” argument when running a node in ros2. Like this: ros2 run --prefix=ddd <package_name> <executable_name>

short rviz2 configuration excourse

If you can’t see anything in rviz you probably need to select the right topics first. For that you need to click the add button in the UI and go to “By topic”. There you should see the topics you can visualize in rviz. If lane_detection_data_loader is running you should be able to see a topic of type “Image”. You can select this to see the video frames. All nodes are running you should see a topic of type “Marker” too.

The actual exercise

Now to write the code.

Note: you are expected to mainly only modify the files: lane_detection_projection.cpp and lane_detection_visualization.cpp. Solutions at the end.

Task 1: build the Data Pipeline

First of all you should create the subscription/subscription callbacks and publishers to get the data to the right places.

To achieve this

In lane_detection_projection.cpp:

Define a subscription and a publisher.

The subscription should be of type lane_msgs::msg::LaneMarkingArrayBoth and the topic to subscribe is /lane_markings_left_right. You will also need to define a callback function with isgnature void foo(lane_msgs::msg::LaneMarkingArrayBoth::SharedPtr bar).

The publisher should be of type lane_msgs::msg::LaneMarkingProjectedArrayBoth and can publish to any topic but I recommend using /lane_markings_left_right_projected.

In lane_detection_visualisation.cpp:

Define a subscription and a publisher.

The subscription should be of type lane_msgs::msg::LaneMarkingProjectedArrayBoth and the topic to subscribe is /lane_markings_left_right_projected. You will also need to define a callback function with isgnature void foo(lane_msgs::msg::LaneMarkingProjectedArrayBoth::SharedPtr bar).

The publisher should be of type visualization_msgs::msg::Marker and can publish to any topic but I recommend using /visual_feedback.

Task 2: work with the Data (OpenCV)

Next you can do the backprojection.

In the callback defined in Task 1 in lane_detection_projection.cpp:

Define message for the resulting projected lanes

For each lane:

- Check if lane is empty

- Create container cv::Mat and destination cv::Mat in which undistorted points will be stored

- Fill the container with points from lane cv::Mat

- Call the cv::undistortPoints function

For each resulting point:

- Create matrix cv::Mat from undistorted point, make it homogeneous

- Multiply with inverse of rotation matrix

- Normalize the homogeneous resulting vector

- Scale ray to the propotion of z-transform-coordinate to put it in the xy-plane (i.e. the ground) and apply transformation of transform vector

- Store projected point in appropriate message type

- Add point to vector of projected points for the left lane in the result message

Publish the resulting combined vectors.

Note: it is possible to execute some of the operations in different order and still get the same result.

Task 3: Visualization in Rviz

Finally to visualize, in the callback in lane_detection_visualisation.cpp:

For each lane:

- Create marker of type LINE_STRIP

- Fill the “points” field in the marker with the coordinates of received projected points of that lane

Publish the markers. And you are done.

Bonus task: Put a car in the scene

In resources in the lane_detection_visualization package you can put an .stl .mesh or .dae file. With the Marker message you can then publish the mesh in the file to Rviz. You already have a royalty free model of a Porsche 911. Try it out!

Solution with explanation

In this part we are going to look at the finished code. We will also explain some of the theoretical concepts.

First lets take a look at lane_detection_projection.cpp:

#define ubyte unsigned char

#define uword unsigned short int

#define BOOST_BIND_NO_PLACEHOLDERS

#include <chrono>

#include <memory>

#include <opencv2/opencv.hpp>

#include "lane_detection_projection/matrixDefines.h"

#include "lane_msgs/msg/lane_marking.hpp"

#include "lane_msgs/msg/lane_marking_array_both.hpp"

#include "lane_msgs/msg/lane_marking_projected.hpp"

#include "lane_msgs/msg/lane_marking_projected_array_both.hpp"

#include <stdio.h>

#include <stdlib.h>

#include "rclcpp/rclcpp.hpp"

#include <vector>

using std::placeholders::_1;

class lane_detection_projection : public rclcpp::Node

{

public:

lane_detection_projection()

: Node("lane_detection_projection")

{

pub_projected_markings = create_publisher<lane_msgs::msg::LaneMarkingProjectedArrayBoth>("/lane_markings_left_right_projected", 10);

sub_markings = create_subscription<lane_msgs::msg::LaneMarkingArrayBoth>("/lane_markings_left_right", 10, std::bind(&lane_detection_projection::laneMarkingCallback, this, _1));

}

void laneMarkingCallback(lane_msgs::msg::LaneMarkingArrayBoth::SharedPtr msg)

{

lane_msgs::msg::LaneMarkingProjectedArrayBoth combined_lanes;

if (msg->markings_left.size() > 0)

{

cv::Mat_<cv::Point2f> leftLanePointMatrix(1, msg->markings_left.size());

cv::Mat undistortedLeftLanePointMatrixNormalised;

for (int i = 0; i < msg->markings_left.size(); i++)

{

leftLanePointMatrix(i) = cv::Point2f(msg->markings_left[i].u, msg->markings_left[i].v);

}

undistortPoints(leftLanePointMatrix, undistortedLeftLanePointMatrixNormalised, intrinsic_calibration_matrix, cv::noArray());

for (cv::MatIterator_<cv::Point2f> i = undistortedLeftLanePointMatrixNormalised.begin<cv::Point2f>(); i != undistortedLeftLanePointMatrixNormalised.end<cv::Point2f>(); ++i)

{

float norm[] = {(*i).x, (*i).y, 1};

cv::Mat rotated_ray = cv::Mat_<float>(1, 3, norm) * rotation_matrix.inv();

cv::Mat rotated_normalised_ray = rotated_ray / rotated_ray.at<float>(2);

float xGround = transform_matrix.at<float>(2) * rotated_normalised_ray.at<float>(0) + transform_matrix.at<float>(0);

float yGround = transform_matrix.at<float>(2) * rotated_normalised_ray.at<float>(1) + transform_matrix.at<float>(1);

lane_msgs::msg::LaneMarkingProjected lmp;

lmp.x = xGround;

lmp.y = yGround;

lmp.z = 0;

combined_lanes.markings_left.push_back(lmp);

}

}

if (msg->markings_right.size() > 0)

{

cv::Mat_<cv::Point2f> rightLanePointMatrix(1, msg->markings_right.size());

cv::Mat undistortedRightLanePointMatrixNormalised;

for (int i = 0; i < msg->markings_right.size(); i++)

{

rightLanePointMatrix(i) = cv::Point2f(msg->markings_right[i].u, msg->markings_right[i].v);

}

undistortPoints(rightLanePointMatrix, undistortedRightLanePointMatrixNormalised, intrinsic_calibration_matrix, cv::noArray());

for (cv::MatIterator_<cv::Point2f> i = undistortedRightLanePointMatrixNormalised.begin<cv::Point2f>(); i != undistortedRightLanePointMatrixNormalised.end<cv::Point2f>(); ++i)

{

float norm[] = {(*i).x, (*i).y, 1};

cv::Mat rotated_ray = cv::Mat_<float>(1, 3, norm) * rotation_matrix.inv();

cv::Mat rotated_normalised_ray = rotated_ray / rotated_ray.at<float>(2);

float xGround = transform_matrix.at<float>(2) * rotated_normalised_ray.at<float>(0) + transform_matrix.at<float>(0);

float yGround = transform_matrix.at<float>(2) * rotated_normalised_ray.at<float>(1) + transform_matrix.at<float>(1);

lane_msgs::msg::LaneMarkingProjected lmp;

lmp.x = -xGround;

lmp.y = yGround;

lmp.z = 0;

combined_lanes.markings_right.push_back(lmp);

}

}

pub_projected_markings->publish(combined_lanes);

}

private:

std::shared_ptr<rclcpp::Publisher<lane_msgs::msg::LaneMarkingProjectedArrayBoth>> pub_projected_markings;

std::shared_ptr<rclcpp::Subscription<lane_msgs::msg::LaneMarkingArrayBoth>> sub_markings;

};

int main(int argc, char *argv[])

{

rclcpp::init(argc, argv);

auto node = std::make_shared<lane_detection_projection>();

rclcpp::Rate loop_rate(1/100.0);

while (rclcpp::ok())

{

rclcpp::spin_some(node);

}

return 0;

}

Task 1 solution (lane_detection_projection.cpp)

All the lines of code relevant to task 1 are here:

pub_projected_markings = create_publisher<lane_msgs::msg::LaneMarkingProjectedArrayBoth>("/lane_markings_left_right_projected", 10);

sub_markings = create_subscription<lane_msgs::msg::LaneMarkingArrayBoth>("/lane_markings_left_right", 10, std::bind(&lane_detection_projection::laneMarkingCallback, this, _1));

...

void laneMarkingCallback(lane_msgs::msg::LaneMarkingArrayBoth::SharedPtr msg)

...

std::shared_ptr<rclcpp::Publisher<lane_msgs::msg::LaneMarkingProjectedArrayBoth>> pub_projected_markings;

std::shared_ptr<rclcpp::Subscription<lane_msgs::msg::LaneMarkingArrayBoth>> sub_markings;

The first few lines are from the constructor, the middle one is how the callback is supposed to look like and the last ones are the definitions of publisher and subscription.

Because these are pretty much self explanatory. I will skip this part when dissecting lane_detection_visualisation.cpp.

Task 2 solution (lane_detection_projection.cpp)

Now for task 2. The first subtask was to define the message for the projected results:

lane_msgs::msg::LaneMarkingProjectedArrayBoth combined_lanes;

LaneMarkingProjectedArrayBoth is chosen here because we can store the results of both lanes in one message.

Then we go and loop through the points of the left lane. In the first few lines the matrix with the 2D points is filled with the points passed in the msg parameter of the callback.

cv::Mat_<cv::Point2f> leftLanePointMatrix(1, msg->markings_left.size());

cv::Mat undistortedLeftLanePointMatrixNormalised;

for (int i = 0; i < msg->markings_left.size(); i++)

{

leftLanePointMatrix(i) = cv::Point2f(msg->markings_left[i].u,msg->markings_left[i].v);

}

After that we call the undistorPoints function from OpenCV. It expects at least a matrix filled with the distorted points, a matrix to be filled with the resulting points and an intrinsic camera calibration matrix.

undistortPoints(leftLanePointMatrix, undistortedLeftLanePointMatrixNormalised, intrinsic_calibration_matrix, cv::noArray());

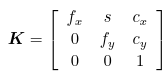

The camera calibration matrix looks like this:  Source: “Richard Szeliski Computer Vision Algorithms and Applications” ISBN 978-1-84882-934-3

Source: “Richard Szeliski Computer Vision Algorithms and Applications” ISBN 978-1-84882-934-3

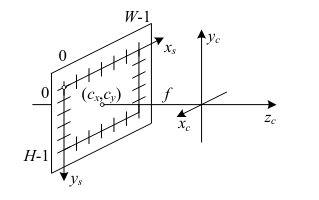

It contains the parameters fx (horizontal focal length), fy (vertical focal length) and the centerpoint coordinates (cx,cy). s is the skew which for our purposes is assumed to be the ideal value of 0. In the following graphic you can see the individual parameters visualised:  Source: “Richard Szeliski Computer Vision Algorithms and Applications” ISBN 978-1-84882-934-3

Source: “Richard Szeliski Computer Vision Algorithms and Applications” ISBN 978-1-84882-934-3

With this matrix you can calculate a homogeneous vector representing a ray with origin in the center of the camera sensor and pointing to the correct point in the image plane. Mathematically you achieve this by multiplying a homogeneous vector with the values of the imagepoint u and v coordinates and the inverse of the intrinsic calibration matrix.

And this is exactly what cv::undistortPoints. It can also account for the distortion of the lens, if you pass the distortion coefficients in the 4th parameter.

Next is to revert the rotation caused by the camera not being aligned with the world coordinate system. That happens here:

float norm[] = {(*i).x, (*i).y, 1};

cv::Mat rotated_ray = cv::Mat_<float>(1, 3, norm) * rotation_matrix.inv();

Only part left is to compensate the translation. The translation is a vector describing the offset of the camera relative to the center of our world. In our case this means the offset relative to the center of the rear axis in our research car.

Because we have a homogeneous vector after the rotation, we cant directly translate it with our transform vector (the vector containing the offset values). We need to first bring the values in the xy-plane because our third value in the rotated ray is not semantically the z-coordinate, but a scale factor which was required for previous operations.

To achieve this we divide the homogeneous vector with the artificial value:

cv::Mat rotated_normalised_ray = rotated_ray / rotated_ray.at<float>(2);

And then we multiply the x and y coordinates with the z-coordinate of the transform vector before correcting the translation:

float xGround = transform_matrix.at<float>(2) * rotated_normalised_ray.at<float>(0) + transform_matrix.at<float>(0);

float yGround = transform_matrix.at<float>(2) * rotated_normalised_ray.at<float>(1) + transform_matrix.at<float>(1);

At that point we have projected the point and can pass the information to the visualization:

lane_msgs::msg::LaneMarkingProjected lmp;

lmp.x = -xGround;

lmp.y = yGround;

lmp.z = 0;

combined_lanes.markings_right.push_back(lmp);

Task 3 solution (lane_detection_visualization.cpp)

The visualization is relatively straight forward.

#define ubyte unsigned char

#define uword unsigned short int

#define BOOST_BIND_NO_PLACEHOLDERS

#include <chrono>

#include <memory>

#include "lane_msgs/msg/lane_marking_projected_array_both.hpp"

#include "visualization_msgs/msg/marker_array.hpp"

#include "visualization_msgs/msg/marker.hpp"

#include "geometry_msgs/msg/pose.hpp"

#include <ament_index_cpp/get_package_share_directory.hpp>

#include <stdlib.h>

#include "rclcpp/rclcpp.hpp"

#include <vector>

using std::placeholders::_1;

class lane_detection_visualization : public rclcpp::Node

{

public:

lane_detection_visualization()

: Node("lane_detection_visualization")

{

pub_marker = create_publisher<visualization_msgs::msg::Marker>("/visual_feedback", 10);

sub_markings = create_subscription<lane_msgs::msg::LaneMarkingProjectedArrayBoth>("/lane_markings_left_right_projected", 10, std::bind(&lane_detection_visualization::laneMarkingCallback, this, _1));

}

void laneMarkingCallback(lane_msgs::msg::LaneMarkingProjectedArrayBoth::SharedPtr msg)

{

visualization_msgs::msg::Marker ma_left_lane;

visualization_msgs::msg::Marker ma_right_lane;

ma_left_lane.header.stamp = this->now();

ma_left_lane.header.frame_id = "map";

ma_left_lane.id = 0;

ma_left_lane.type = visualization_msgs::msg::Marker::LINE_STRIP;

ma_left_lane.action = visualization_msgs::msg::Marker::ADD;

ma_left_lane.pose.position.x = 0;

ma_left_lane.pose.position.y = 0;

ma_left_lane.pose.position.z = 0;

ma_left_lane.pose.orientation.x = 0;

ma_left_lane.pose.orientation.y = 0;

ma_left_lane.pose.orientation.z = 0;

ma_left_lane.pose.orientation.w = 1;

ma_left_lane.color.a=1.0;

ma_left_lane.color.r=1.0;

ma_left_lane.color.g=0;

ma_left_lane.color.b=0;

ma_left_lane.scale.x=0.1;

ma_left_lane.scale.y=0.1;

ma_left_lane.scale.z=0.1;

for (int i = 0; i < msg->markings_left.size(); i++)

{

geometry_msgs::msg::Point point;

point.x = msg->markings_left[i].x;

point.y = msg->markings_left[i].y;

point.z = msg->markings_left[i].z;

ma_left_lane.points.push_back(point);

}

ma_right_lane.header.stamp = this->now();

ma_right_lane.header.frame_id = "map";

ma_right_lane.id = 1;

ma_right_lane.type = visualization_msgs::msg::Marker::LINE_STRIP;

ma_right_lane.action = visualization_msgs::msg::Marker::ADD;

ma_right_lane.pose.position.x = 0;

ma_right_lane.pose.position.y = 0;

ma_right_lane.pose.position.z = 0;

ma_right_lane.pose.orientation.x = 0;

ma_right_lane.pose.orientation.y = 0;

ma_right_lane.pose.orientation.z = 0;

ma_right_lane.pose.orientation.w = 1;

ma_right_lane.color.a=1.0;

ma_right_lane.color.r=1.0;

ma_right_lane.color.g=0;

ma_right_lane.color.b=0;

ma_right_lane.scale.x=0.1;

ma_right_lane.scale.y=0.1;

ma_right_lane.scale.z=0.1;

for (int i = 0; i < msg->markings_right.size(); i++)

{

geometry_msgs::msg::Point point;

point.x = msg->markings_right[i].x;

point.y = msg->markings_right[i].y;

point.z = msg->markings_right[i].z;

ma_right_lane.points.push_back(point);

}

pub_marker->publish(ma_left_lane);

pub_marker->publish(ma_right_lane);

visualization_msgs::msg::Marker marker;

marker.header.frame_id = "map";

marker.header.stamp = this->now();

marker.id = 911;

marker.type = visualization_msgs::msg::Marker::MESH_RESOURCE;

marker.action = visualization_msgs::msg::Marker::ADD;

marker.mesh_resource = "file://"+ament_index_cpp::get_package_share_directory("lane_detection_visualization")+"/resources/low_poly_911.dae";

marker.pose.position.x = 0;

marker.pose.position.y = 0;

marker.pose.position.z = 0.5;

marker.pose.orientation.x = 0.0;

marker.pose.orientation.y = 0.0;

marker.pose.orientation.z = 1.0;

marker.pose.orientation.w = 0.0;

marker.scale.x = 1;

marker.scale.y = 1;

marker.scale.z = 1;

marker.color.b = 1.0;

marker.color.g = 1.0;

marker.color.r = 1.0;

marker.color.a = 1.0;

pub_marker->publish(marker);

}

private:

std::shared_ptr<rclcpp::Publisher<visualization_msgs::msg::Marker>> pub_marker;

std::shared_ptr<rclcpp::Subscription<lane_msgs::msg::LaneMarkingProjectedArrayBoth>> sub_markings;

};

int main(int argc, char *argv[])

{

rclcpp::init(argc, argv);

auto node = std::make_shared<lane_detection_visualization>();

rclcpp::Rate loop_rate(1/100.0);

while (rclcpp::ok())

{

rclcpp::spin_some(node);

}

rclcpp::shutdown();

return 0;

}

You have to create 2 Marker messages and fill them properly:

ma_left_lane.header.stamp = this->now();

ma_left_lane.header.frame_id = "map";

ma_left_lane.id = 0;

ma_left_lane.type = visualization_msgs::msg::Marker::LINE_STRIP;

ma_left_lane.action = visualization_msgs::msg::Marker::ADD;

ma_left_lane.pose.position.x = 0;

ma_left_lane.pose.position.y = 0;

ma_left_lane.pose.position.z = 0;

ma_left_lane.pose.orientation.x = 0;

ma_left_lane.pose.orientation.y = 0;

ma_left_lane.pose.orientation.z = 0;

ma_left_lane.pose.orientation.w = 1;

ma_left_lane.color.a=1.0;

ma_left_lane.color.r=1.0;

ma_left_lane.color.g=0;

ma_left_lane.color.b=0;

ma_left_lane.scale.x=0.1;

ma_left_lane.scale.y=0.1;

ma_left_lane.scale.z=0.1;

To get the line visualization, you need to make use of the “points” field. Fill it with the correct values like this:

for (int i = 0; i < msg->markings_left.size(); i++)

{

geometry_msgs::msg::Point point;

point.x = msg->markings_left[i].x;

point.y = msg->markings_left[i].y;

point.z = msg->markings_left[i].z;

ma_left_lane.points.push_back(point);

}

Bonus task solution

Use the MESH_RESOURCE type of marker and just pass the file you want.

visualization_msgs::msg::Marker marker;

marker.header.frame_id = "map";

marker.header.stamp = this->now();

marker.id = 911;

marker.type = visualization_msgs::msg::Marker::MESH_RESOURCE;

marker.action = visualization_msgs::msg::Marker::ADD;

marker.mesh_resource = "file://"+ament_index_cpp::get_package_share_directory("lane_detection_visualization")+"/resources/low_poly_911.dae";

marker.pose.position.x = 0;

marker.pose.position.y = 0;

marker.pose.position.z = 0.5;

marker.pose.orientation.x = 0.0;

marker.pose.orientation.y = 0.0;

marker.pose.orientation.z = 1.0;

marker.pose.orientation.w = 0.0;

marker.scale.x = 1;

marker.scale.y = 1;

marker.scale.z = 1;

marker.color.b = 1.0;

marker.color.g = 1.0;

marker.color.r = 1.0;

marker.color.a = 1.0;

pub_marker->publish(marker);

评论区